The edtech landscape is evolving quickly: the swift emergence and integration of generative AI and large language models (LLMs) are reshaping how students learn and how educators teach. How can we balance our desire to innovate and adopt emerging technologies with the need to ensure these tools are accessible, relevant, and effective for students?

In our recent 2024 EdTech Evidence Report, we took a close look at how the most widely used edtech tools in K-12 stack up, using data aggregated from some of the industry's most trusted third-party organizations that publish accessible evidence across key domains. Our findings? Progress is being made, but we have a long way to go. Because AI is so new and expanding so rapidly, we didn't include it in the EdTech Evidence Report. That doesn't diminish the need to consider the evidence behind these tools.

In particular, as educators and students spend more time using AI-based tools and interacting with AI-generated content, it's imperative that we find ways to evaluate their effectiveness. We can't afford to treat evidence as secondary or to pursue it only through traditional, slow-paced methods. What's needed now are new, faster ways to gather and share all forms of evidence.

Developing ESSA-Aligned Evidence for an AI-Powered Reading Tutor

Although AI in education is new, some providers, such as Amira Learning, are already ahead of the game and proactively building evidence for their tools.

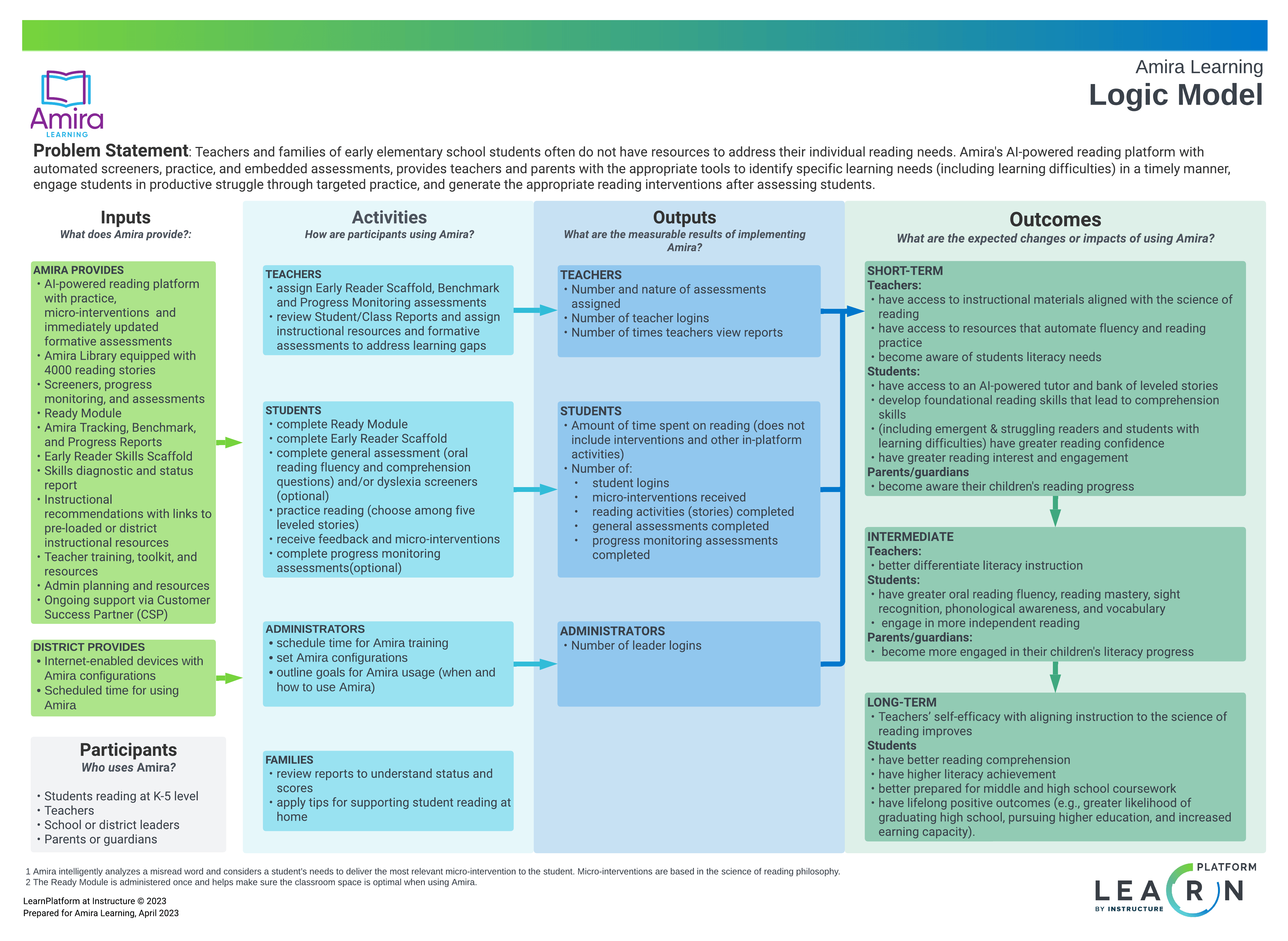

Recognizing that teachers and parents of early elementary students often lack the resources to address each child's individual learning needs, Amira Learning introduced an AI-enabled reading platform. The platform administers screeners, provides targeted practice, conducts assessments, and generates relevant reading interventions, helping teachers and parents identify and address learning needs promptly.

Amira Learning partnered with LearnPlatform by Instructure to build a logic model for its AI-powered reading tutor. A logic model lays out a program's intended impact by showing how inputs are turned into measurable activities that produce expected results. It consists of five core elements: inputs, participants, activities, outputs, and outcomes.

By demonstrating how Amira's technology connects to the actions users can take within the platform, the logic model satisfied the Every Student Succeeds Act (ESSA) evidence requirements for Level IV (Demonstrates a Rationale), and we recognized Amira Learning with an ESSA Evidence Badge. LearnPlatform's ESSA Evidence Badges streamline the edtech vetting process by highlighting a solution's evidence base and its commitment to research.

Looking Ahead to the Future

Given these findings, we need to address the rapidly evolving relationship between evidence and AI tools. These next-generation tools can disrupt current models, and they bring data privacy and security into sharp focus. We believe our formative approach to evaluation positions us well to meet this challenge.

We strive to deliver meaningful results for educators by responding to change both rigorously and quickly. As these tools evolve, the question becomes: how do we evaluate them effectively? The key lies in examining accessibility, data privacy, security, curriculum and content, and research.

AI in edtech is a rapidly changing frontier, and evaluating these tools adequately will require new tactics, new protocols, and broad thinking. At Instructure, we understand this need and are prepared to lead the effort. As thought leaders in the edtech space, we are excited to open new avenues for exploring and advancing AI effectiveness. Now more than ever, we see it as both a responsibility and an opportunity to apply our expertise to the evolving landscape of digital education.